Big Data

We build intelligent enterprise big data solutions with prebuilt, value-driven cloud applications and a comprehensive portfolio of infrastructure and cloud platform services. We help organizations securely automate operations, drive innovation, and make smarter, better-suited decisions.

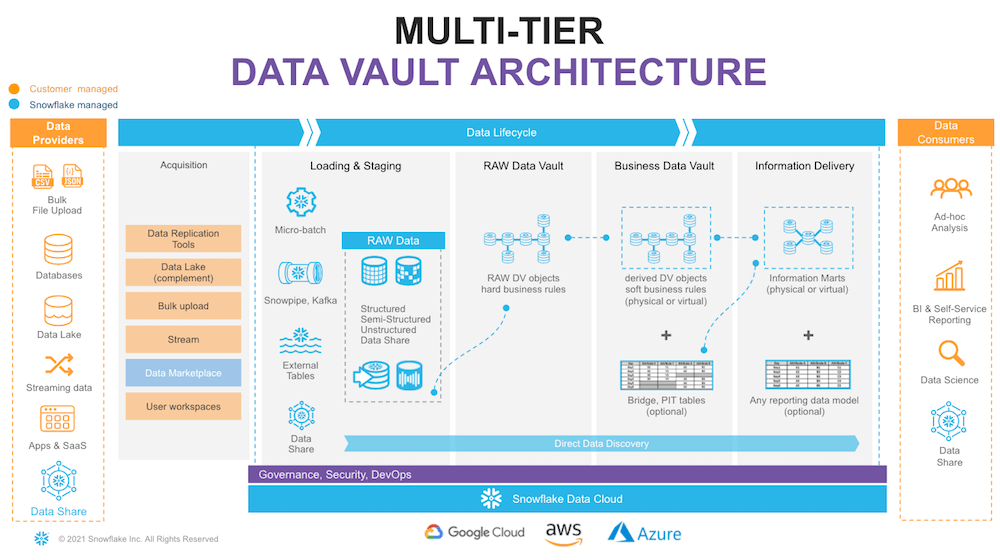

Data Vault

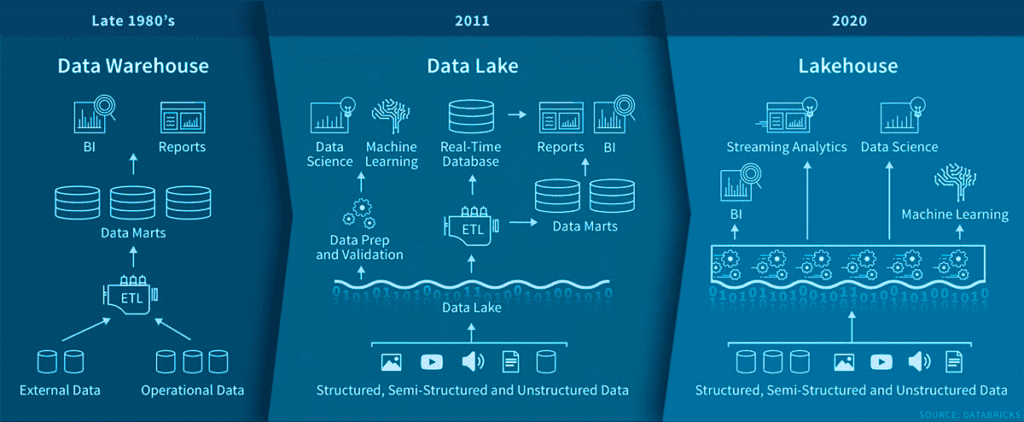

Lake House

A data lakehouse is a new, open data management architecture that combines the flexibility, cost-efficiency, and scale of data lakes with the data management and ACID transactions of data warehouses, enabling business intelligence (BI) and machine learning (ML) on all data.
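One common way lakehouse table formats add ACID transactions on top of files in a data lake is a transaction log. Each commit records which data files were added or removed, and readers replay the log to get a consistent snapshot. The sketch below is a simplified, in-memory model of that idea, loosely inspired by open table formats such as Delta Lake. The `TableLog` class, its methods, and the file names are hypothetical. A real system persists each log entry to storage and relies on an atomic, mutually exclusive write to decide which concurrent commit wins.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, Iterable, List, Optional


class ConflictError(Exception):
    """Raised when another writer committed after this writer read the table."""


@dataclass(frozen=True)
class Commit:
    version: int
    added: FrozenSet[str]    # data files that become visible in this commit
    removed: FrozenSet[str]  # data files that stop being visible in this commit


@dataclass
class TableLog:
    """Hypothetical in-memory transaction log over immutable data files."""
    commits: List[Commit] = field(default_factory=list)

    def latest_version(self) -> int:
        # -1 means the table has no commits yet.
        return len(self.commits) - 1

    def snapshot(self, version: Optional[int] = None) -> FrozenSet[str]:
        """Replay commits 0..version to get the set of live data files."""
        if version is None:
            version = self.latest_version()
        live: set = set()
        for c in self.commits[: version + 1]:
            live -= c.removed
            live |= c.added
        return frozenset(live)

    def commit(self, read_version: int, added: Iterable[str] = (),
               removed: Iterable[str] = ()) -> int:
        """Append one all-or-nothing commit, or fail if the table moved on.

        Optimistic concurrency: the writer states which version it read, and
        the commit is rejected if any other commit landed in the meantime.
        """
        if read_version != self.latest_version():
            raise ConflictError(
                f"read version {read_version}, but table is at {self.latest_version()}"
            )
        new = Commit(self.latest_version() + 1, frozenset(added), frozenset(removed))
        self.commits.append(new)  # stands in for an atomic write of the log entry
        return new.version


log = TableLog()
v0 = log.commit(read_version=-1, added={"part-000.parquet"})
v1 = log.commit(read_version=v0, added={"part-001.parquet"})
# Compaction: replace two small files with one larger file in a single commit.
v2 = log.commit(read_version=v1, added={"part-002.parquet"},
                removed={"part-000.parquet", "part-001.parquet"})

print(sorted(log.snapshot()))    # ['part-002.parquet']
print(sorted(log.snapshot(v1)))  # ['part-000.parquet', 'part-001.parquet']

try:
    # A writer that read v1 and did not see the compaction is rejected.
    log.commit(read_version=v1, added={"part-003.parquet"})
except ConflictError as err:
    print("conflict:", err)
```

Because readers only ever see whole commits, the compaction is atomic: a reader sees either the two small files or the single compacted file, never a mix. Replaying to an older version gives "time travel" queries for free. This sketch rejects any commit whose writer read an older version; real formats typically check whether the concurrent changes actually conflict and retry when they do not.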

Data Wrangling

Data wrangling is the process of cleaning, structuring and enriching raw data into a desired format so that better decisions can be made in less time. Data wrangling is increasingly common at today’s top firms. Data has become more diverse and unstructured, demanding more time spent culling, cleaning, and organizing data ahead of broader analysis. At the same time, with data informing just about every business decision, business users have less time to wait on technical resources for prepared data.

This calls for a move away from IT-led data preparation toward a more democratized, self-service model of data preparation, or data wrangling. With self-service data wrangling tools, analysts can tackle more complex data more quickly, produce more accurate results, and make better decisions. As a result, more businesses have started using data wrangling tools to prepare data before analysis. Hexalytics’ experts help make people who analyze data radically more productive. We are deeply focused on solving the biggest bottleneck in the data lifecycle, data wrangling, by making it more intuitive and efficient for anyone who works with data.
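The three steps named above (cleaning, structuring, and enriching) can be sketched in plain Python. This is a minimal illustration, not Hexalytics' tooling: the sample rows, the `COUNTRY_ALIASES` and `REGION_BY_COUNTRY` lookup tables, the `Sale` record, and the `wrangle` function are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical raw rows, e.g. from a CSV export with inconsistent formatting.
RAW_ROWS: List[Dict[str, str]] = [
    {"customer": "  Alice Smith ", "country": "us", "amount": "1,200.50"},
    {"customer": "BOB JONES", "country": "U.S.", "amount": "300"},
    {"customer": "dana weber", "country": "de ", "amount": "75.25"},
    {"customer": "", "country": "fr", "amount": "10"},                    # no name
    {"customer": "Alice Smith", "country": "US", "amount": "1,200.50"},  # duplicate
    {"customer": "Carla Diaz", "country": "mx", "amount": "n/a"},        # bad amount
]

# Hypothetical reference data used for cleaning and enrichment.
COUNTRY_ALIASES = {"U.S.": "US"}
REGION_BY_COUNTRY = {"US": "North America", "MX": "North America", "DE": "Europe"}


@dataclass(frozen=True)
class Sale:
    """The desired, typed output format."""
    customer: str
    country: str
    amount: float
    region: str


def parse_amount(text: str) -> Optional[float]:
    """Parse '1,200.50' -> 1200.5; return None if the value is not numeric."""
    try:
        return float(text.replace(",", ""))
    except ValueError:
        return None


def wrangle(rows: List[Dict[str, str]]) -> List[Sale]:
    seen = set()
    sales: List[Sale] = []
    for row in rows:
        # 1. Clean: collapse whitespace, normalize case and country codes.
        name = " ".join(row["customer"].split()).title()
        country = row["country"].strip().upper()
        country = COUNTRY_ALIASES.get(country, country)
        amount = parse_amount(row["amount"])
        if not name or amount is None:
            continue  # drop rows that cannot be repaired
        # 2. Deduplicate on the normalized fields.
        key = (name, country, amount)
        if key in seen:
            continue
        seen.add(key)
        # 3. Enrich and structure: look up the region, emit a typed Sale record.
        region = REGION_BY_COUNTRY.get(country, "Unknown")
        sales.append(Sale(name, country, amount, region))
    return sales


for sale in wrangle(RAW_ROWS):
    print(sale)
```

Of the six raw rows, three survive: the nameless row and the unparseable amount are dropped, and the second "Alice Smith" row is recognized as a duplicate only after its whitespace and country code are normalized. In practice the lookup tables would come from reference data rather than being hard-coded.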

API

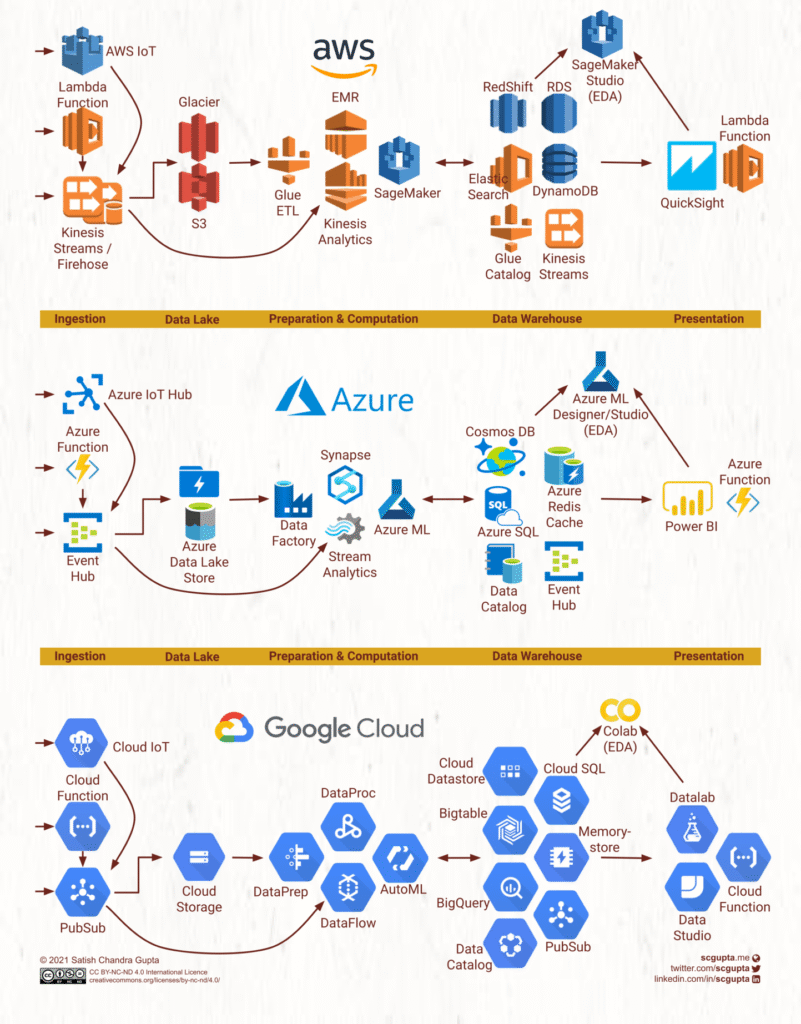


Connect

Meet our partners

Delivering added value to our clients through trusted, world-renowned partners. Our collaboration and partnerships with these companies allow us to provide high-end resources and expertise, fulfilling our mission of innovation through data.