Digital adoption has accelerated in recent years. New systems have been deployed, data sources have multiplied, and teams now rely heavily on digital processes. Yet despite this progress, many organizations still struggle with data quality, consistency, and trust. Gartner notes that more than 72% of enterprises face challenges with the reliability of their data, not because they lack information, but because their underlying architecture wasn’t built for the speed, scale, and complexity of today’s environment.

Traditional data models were designed for simpler times when data moved slowly, systems were fewer, and reporting expectations were basic. As companies expand across platforms, markets, and channels, old architectures strain under the weight of real-time analytics, unified reporting, and AI-driven workloads. This is where flexible, multi-layer data models have emerged as the foundation for modern enterprise data transformation and long-term scalability.

Why Traditional Approaches Are No Longer Enough

Enterprise data environments are evolving rapidly, and three major trends are exposing the limitations of legacy architectures.

Rising Data Volume and Complexity

IDC forecasts that global data creation will surpass 221 zettabytes by 2026. Storing that data is no longer the issue. The real challenge lies in managing, governing, and preparing it for analytics, real-time reporting, and AI-driven use cases. Legacy systems struggle to maintain structure, lineage, and consistency at this scale.

Growing Fragmentation Across Systems

Most organizations rely on a mix of CRM platforms, ERP systems, operational tools, industry applications, marketing platforms, and spreadsheets. Each generates its own structure, definitions, and business logic. This fragmentation leads to conflicting KPIs, mismatched dashboards, duplicated effort, slower reporting cycles, and limited trust in analytics.

The Shift Toward Real-Time and AI-Driven Workflows

Operational teams need insights in the moment, not after weekly or monthly reports. AI and machine learning require clean, well-modeled, high-quality data, which traditional architectures were not built to provide. Data architecture must evolve to support real-time analytics, strong governance, and AI-ready pipelines.

Why Multi-Layer Architecture Has Become Essential

To meet modern business needs, organizations are adopting the multi-layer data model: a layered, modular, scalable architecture that improves reliability, consistency, and performance. Three reasons explain why it is becoming the standard for enterprise data modernization.

1. It Brings Order to Complexity and Creates a Reliable Data Foundation

As organizations grow, their data landscape becomes more fragmented, with multiple systems, formats, pipelines, and business rules. A multi-layer model introduces structure where it is needed most.

The Raw layer preserves source data for traceability and lineage. The Curated layer standardizes, validates, and cleans data. The Semantic layer delivers business-ready, KPI-aligned insights that everyone can rely on.

This layered approach reduces chaos, improves data quality, and creates a predictable foundation that scales with future use cases including real-time analytics and AI.
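As a rough illustration of the Raw, Curated, and Semantic layers described above, the flow can be sketched in a few lines of Python. Everything here is a hypothetical stand-in: the `RAW_ORDERS` records, the field names, and the `curate` and `daily_revenue` helpers are assumptions for this example, and a production platform would typically implement each layer as tables or views in a warehouse or lakehouse rather than as in-memory lists.

```python
from dataclasses import dataclass
from datetime import date

# Raw layer (hypothetical data): source records kept exactly as received,
# including inconsistent formatting, a bad value, and a duplicate.
RAW_ORDERS = [
    {"order_id": "A-1", "amount": "120.50", "currency": "usd", "order_date": "2024-03-01", "source": "crm"},
    {"order_id": "A-2", "amount": " 80 ", "currency": "USD", "order_date": "2024-03-01", "source": "erp"},
    {"order_id": "A-3", "amount": "n/a", "currency": "USD", "order_date": "2024-03-02", "source": "erp"},
    {"order_id": "A-1", "amount": "120.50", "currency": "usd", "order_date": "2024-03-01", "source": "crm"},
]


@dataclass(frozen=True)
class CuratedOrder:
    """A validated, standardized order record (illustrative schema)."""
    order_id: str
    amount: float
    currency: str
    order_date: date
    source: str  # retained so each curated row can be traced to its origin


def curate(raw_rows):
    """Curated layer: validate, standardize, and de-duplicate raw rows.

    Returns (curated, rejected). The raw input is never modified, so the
    Raw layer stays available for traceability and lineage.
    """
    curated, rejected, seen_ids = [], [], set()
    for row in raw_rows:
        try:
            order_id = row["order_id"]
            amount = float(row["amount"].strip())
            order_date = date.fromisoformat(row["order_date"])
            currency = row["currency"].upper()
            source = row["source"]
        except (KeyError, ValueError):
            rejected.append(row)  # keep failures visible instead of dropping them silently
            continue
        if order_id in seen_ids:
            continue  # skip repeated order IDs
        seen_ids.add(order_id)
        curated.append(CuratedOrder(order_id, amount, currency, order_date, source))
    return curated, rejected


def daily_revenue(curated_rows):
    """Semantic layer: a business-ready metric built only from curated data."""
    totals = {}
    for order in curated_rows:
        totals[order.order_date] = totals.get(order.order_date, 0.0) + order.amount
    return totals


curated_orders, rejected_rows = curate(RAW_ORDERS)
revenue_by_day = daily_revenue(curated_orders)
```

The point of the sketch is the direction of dependency: the semantic function reads only curated records, and the curated step reads only raw records, so a data-quality rule can change in one place without touching the source data or the reports built on top.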

2. It Ensures Consistent, Trusted KPIs Across the Enterprise

KPI inconsistency is one of the biggest blockers to becoming a data-driven organization. Different teams define revenue, cost, margin, or customer metrics differently, creating misalignment and confusion.

A multi-layer architecture centralizes business logic in the semantic layer, creating a unified source of truth for all KPIs. This ensures consistent definitions, aligned reporting, improved data trust, and faster decision-making across the organization.
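One way to picture centralized business logic is a single registry of KPI definitions that every team calls, rather than each dashboard re-implementing its own formula. The sketch below is a simplified assumption, not a description of any particular semantic-layer product: `ORDERS`, `KPI_DEFINITIONS`, and `compute_kpi` are hypothetical names, and the margin formula is just one possible definition.

```python
# Hypothetical curated rows; field names are illustrative assumptions.
ORDERS = [
    {"region": "EMEA", "revenue": 1000.0, "cost": 600.0},
    {"region": "EMEA", "revenue": 500.0, "cost": 400.0},
    {"region": "APAC", "revenue": 800.0, "cost": 200.0},
]


def total_revenue(rows):
    """Sum of revenue over the given rows."""
    return sum(r["revenue"] for r in rows)


def gross_margin_pct(rows):
    """(revenue - cost) / revenue as a percentage, rounded to 2 decimals."""
    revenue = total_revenue(rows)
    cost = sum(r["cost"] for r in rows)
    if revenue == 0:
        return 0.0
    return round((revenue - cost) / revenue * 100, 2)


# The single place where KPI logic lives. Changing a definition here
# changes it for every consumer at once.
KPI_DEFINITIONS = {
    "revenue": total_revenue,
    "gross_margin_pct": gross_margin_pct,
}


def compute_kpi(name, rows, region=None):
    """Look up a KPI by name and evaluate it, optionally for one region."""
    if name not in KPI_DEFINITIONS:
        raise KeyError(f"Unknown KPI: {name}")
    selected = [r for r in rows if region is None or r["region"] == region]
    return KPI_DEFINITIONS[name](selected)


# Two teams asking for the same KPI get the same number by construction.
finance_view = compute_kpi("gross_margin_pct", ORDERS)
sales_view = compute_kpi("gross_margin_pct", ORDERS)
emea_margin = compute_kpi("gross_margin_pct", ORDERS, region="EMEA")
```

Because consumers can only filter the data and cannot redefine the formula, disagreements about what "margin" means are settled once, in the registry, instead of surfacing later as mismatched dashboards.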

3. It Prepares the Organization for Real-Time Analytics and AI

Modern businesses need fast, accurate, and actionable insights. Real-time dashboards, automated alerts, and AI-driven workflows rely on clean, consistent, and well-modeled data.

A multi-layer architecture supports this by providing low-latency processing pipelines, high-quality curated datasets, and structured semantic models. Instead of retrofitting AI onto messy data ecosystems, the entire platform becomes AI-ready by design.

Conclusion

A flexible, multi-layer data model is no longer just a technical upgrade. It is the architectural backbone of a modern, insight-driven enterprise. It brings structure to complex environments, restores consistency where fragmentation once existed, and creates a stable foundation for real-time analytics, data governance, and AI. For organizations looking to modernize, scale, and unlock the full value of their data, this approach offers a clear and practical path. Those who invest in strong data foundations today will be the ones who adapt faster, innovate sooner, and lead with greater clarity tomorrow.

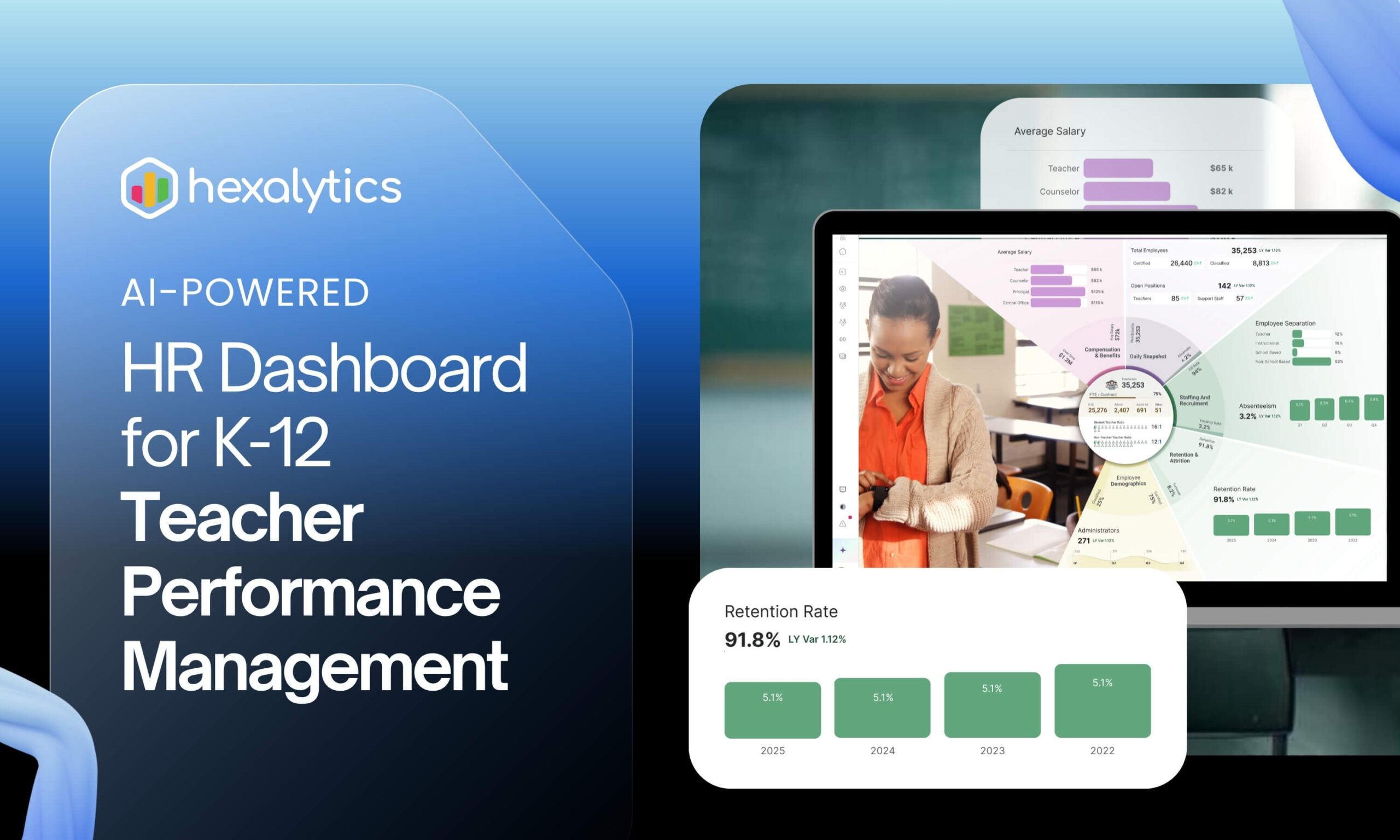

About Hexalytics

Hexalytics is a modern data engineering and analytics consultancy with over a decade of experience helping organizations build scalable, trusted, and AI-ready data ecosystems. We simplify complex data environments through architecture-led design, multi-layer data modeling, lakehouse frameworks, and unified semantic layers that support consistent KPIs and real-time insights. Our vendor-neutral approach and end-to-end capabilities across strategy, engineering, governance, and analytics enable organizations to modernize their data platforms, improve decision-making, and turn information into a driver of growth.

Ready to modernize your data foundation? If your organization is exploring data modernization or looking to build a stronger, more scalable data foundation, we can help. Schedule a discussion with the Hexalytics team to assess your current landscape, explore the benefits of a multi-layer data model, and map out a practical path forward.